Hi!

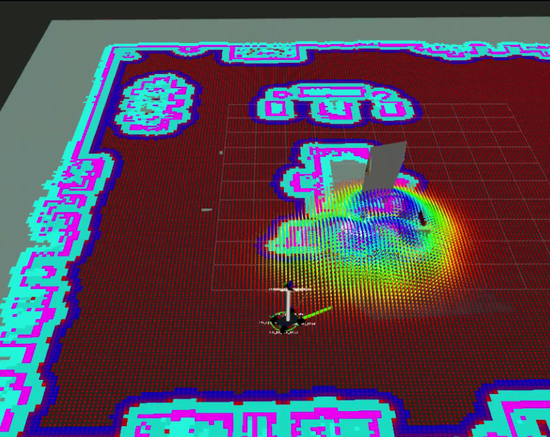

My research focuses on deep learning algorithms and their integration into robotic systems, with the goal of making robots more intelligent, physically consistent, and able to interact seamlessly with people. As a Senior Software Engineer in Machine Learning and Robotics at Locus Robotics, I contribute to the development of autonomous mobile robots, enabling them to perceive their surroundings and make intelligent decisions.

I recently completed my Master of Science degree in Computer Science from the University of British Columbia, under the supervision of Ian M. Mitchell. Before enrolling at UBC, I was a research fellow at TCS Research and Innovation Labs, where I contributed to the automation of warehouse robotics under the guidance of Swagat Kumar and Rajesh Sinha. Additionally, I hold a Bachelor’s degree in Computer Science from IIIT Delhi, where I worked under the supervision of Rahul Purandare and closely collaborated with P.B. Sujit.

My work centers on pushing the boundaries of what machine learning can do in robotics, with the aim of building systems that are not only autonomous but also capable of sophisticated interaction and collaboration with humans.

Interests

- Robotics (Perception + Planning)

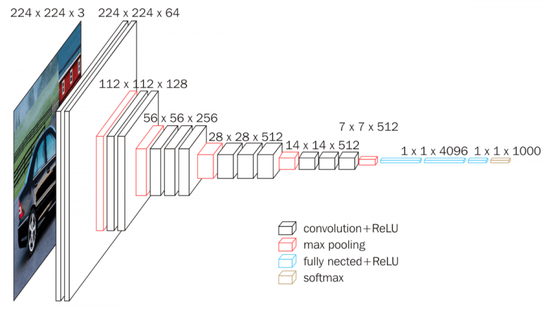

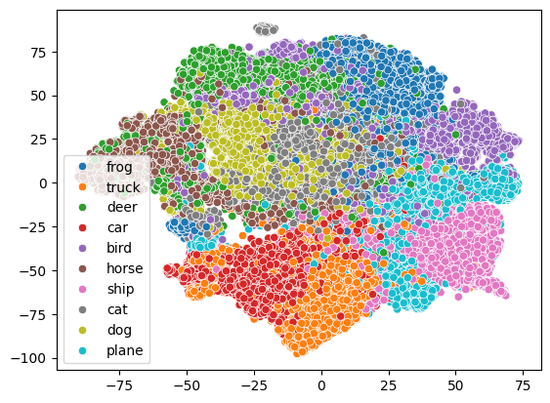

- Computer Vision

- Deep Learning for Images and Point Clouds

- Competitive Programming

Education

- MSc in Computer Science, 2022  
  University of British Columbia, Vancouver

- BTech in Computer Science Engineering, 2017  
  Indraprastha Institute of Information Technology, Delhi